Understanding the LangChain HumanMessage class for LLMs

Sep 2, 2024.

In conversational AI and large language models, managing different types of messages is crucial.

LangChain, a popular framework for building applications with LLMs, provides several message classes that help developers structure their conversations. Among these, HumanMessage is the one that represents the user's input, which is what most conversations revolve around.

In this blog, we'll dive deep into the HumanMessage class, exploring its features, usage, and how it fits into the broader LangChain ecosystem. We'll also discuss how Lunary can provide valuable analytics to optimize your LLM applications.

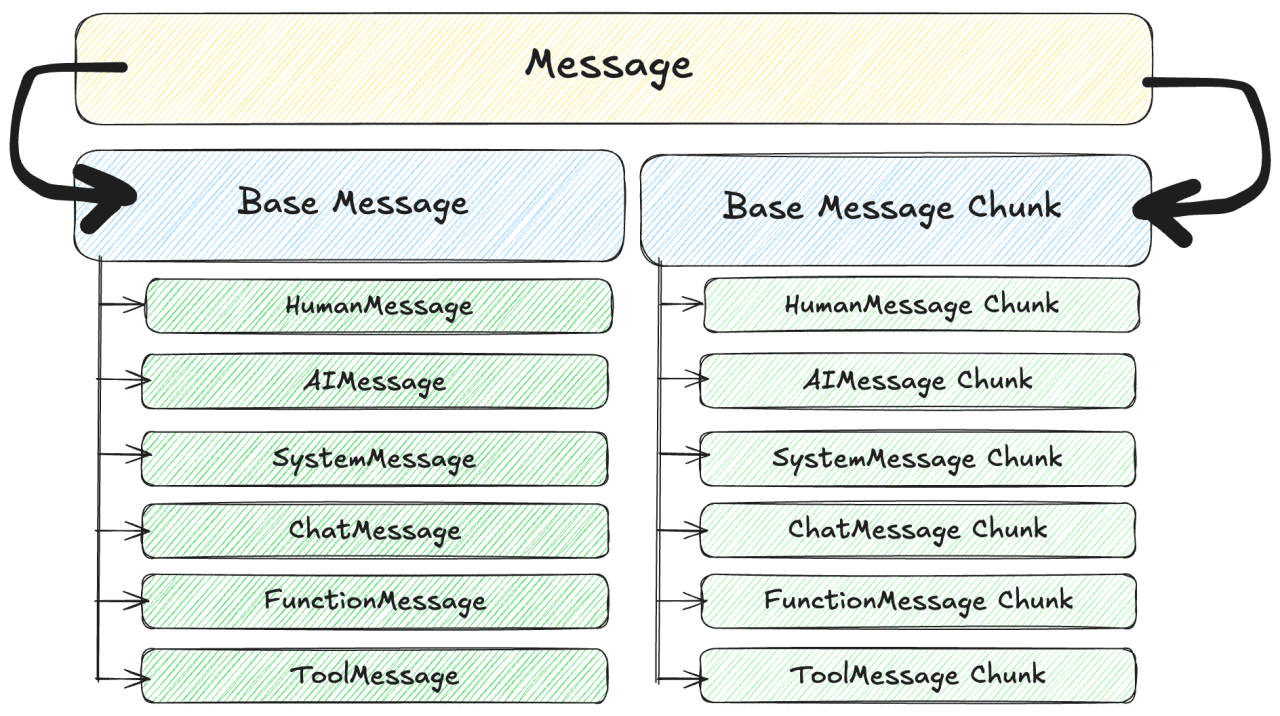

Message classes in LangChain

The following classes extend the BaseMessage class to create several specialized message types:

- SystemMessage: Used for providing instructions or context to the AI system.

- HumanMessage: Represents input from a human user.

- ChatMessage: A general-purpose message that carries an arbitrary, caller-specified role.

- ToolMessage: Carries the result of a tool call that was requested in an AI response.

- AIMessage: Contains responses generated by an AI model.

Each of these message types inherits from BaseMessage but may have additional properties or methods specific to its role. These are the parameters and methods supported by the classes above:

| Parameter/Method | Description | Type/Default |
|---|---|---|
| content | Core message content, passed as the first positional argument or as content=. | str or list of str/dict (Required) |
| kwargs | Optional additional fields for message customization. | dict (Optional) |
| additional_kwargs | Extra payload data related to the message (e.g., tool calls in AI responses). | dict (Optional) |
| id | A unique identifier for the message, typically provided by the model or service that generated it. | str (Optional) |
| name | An optional human-readable label for the message, such as the speaker's name. | str (Optional) |
| response_metadata | Metadata related to the response, such as headers, log probabilities, and token counts. | dict (Optional) |
| type | The message type used for serialization: 'system' for SystemMessage, 'human' for HumanMessage, and so on. | Literal |
| pretty_print() | Prints the message in a readable format. | None (Method) |
| pretty_repr(html=False) | Returns a formatted string representation of the message, optionally as HTML. | str (Method) |
| html (in pretty_repr) | Whether to wrap the formatted message in HTML tags. | bool (Default = False) |

Here's a simple example that shows the role of each message type:

- SystemMessage: "You are a travel assistant. Answer users' travel-related questions about international trips."

- HumanMessage: "Can you suggest some countries to visit, especially during winter?"

- AIMessage: "Consider Switzerland, Canada, and Japan for winter travel."

These message types tell the model who said what in a conversation. That structure is what makes multi-turn tasks and coherent conversations possible, and developers build on it for applications such as virtual assistants, homework helpers, and AI characters in games.

Importance of the HumanMessage class

In conversational AI applications, knowing the role of each participant is essential for generating appropriate, contextually relevant responses. The HumanMessage class in LangChain supports this by marking a message as coming from the human user.

Chat models receive a list of messages from the application code. In the OpenAI API, each message has a role (such as system, user, or assistant) and content. In most applications the trigger for a model call is user input, so tagging that input as a HumanMessage ensures the model can track the flow of the conversation accurately.

Consider a simple customer-support conversation:

System: You are a customer support agent. Please assist the user with their queries.

User: How can I reset my password?

Support Agent: To reset your password, please follow these steps: ...

Here is the same conversation as the messages list of an OpenAI Chat Completions request:

```json
[
  {"role": "system", "content": "You are a customer support agent. Please assist the user with their queries."},
  {"role": "user", "content": "How can I reset my password?"},
  {"role": "assistant", "content": "To reset your password, please follow these steps: ..."}
]
```

To better understand HumanMessage and how to use it in your LLM applications, we'll build a chatbot that acts as a travel guide.

To do this, we'll use the HumanMessage class for user prompts and connect it to an OpenAI chat model.

Project setup

Create a new project folder and set up a virtual environment for it.

Make sure you are using Python 3.10 or later (3.12 recommended).

```bash
mkdir travel_consultant_ai
cd travel_consultant_ai
pipenv --python 3.12
pipenv install langchain langchain-openai lunary
touch chatbot.py
pipenv shell
```

Now that the base setup is in place, let's write a small program that wraps user input in a HumanMessage and sends it to an OpenAI model to generate a response.

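```python
import os

from langchain_core.messages import HumanMessage
from langchain_openai import ChatOpenAI

# Assumes your OpenAI API key is stored in the OPENAI_API_KEY environment variable
chat = ChatOpenAI(api_key=os.environ["OPENAI_API_KEY"])

message = HumanMessage(content="Hello, where can I go on vacation?")

# invoke() takes a list of messages and returns an AIMessage
response = chat.invoke([message])
print(response.content)
```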

Running this prints the model's reply in the terminal, which confirms that the setup works. Next, let's make the program more useful by combining the different message types.

Travel Guide Assistant

In this section, we'll build a conversational AI that understands and responds to travel-related queries.

```python
import os

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI

# Initialize the chat model (API key read from the OPENAI_API_KEY environment variable)
chat = ChatOpenAI(api_key=os.environ["OPENAI_API_KEY"])

# Define the system message
system_message = SystemMessage(content="You are a helpful assistant that provides travel advice.")

# Initialize the message list with the system message
messages = [system_message]


# Send the full message history to the chat model and return its reply
def AIResponseAgent(messages):
    response = chat.invoke(messages)
    return response


# Collect user input for the conversation
while True:
    user_input = input("Enter your message (type 'exit' to end): ")
    if user_input.lower() == "exit":
        break

    # Add the user's input to the history as a HumanMessage
    messages.append(HumanMessage(content=user_input))

    # Invoke the chat model with the current message history
    response = AIResponseAgent(messages)

    # Print the AI's response and add it to the history
    print("AI:", response.content)
    messages.append(AIMessage(content=response.content))

# Inform the user that the conversation has ended
print("Conversation ended.")
```

We keep every message in a list to preserve the context of the conversation. Chat models don't remember earlier calls, so each request must include the history. Every message added to that history helps the model follow the flow of the dialogue and build on its previous responses. Without the history, the model would see only the latest user input and lose important context from earlier in the conversation.

Within that history, it's important to know which messages are user prompts: the goal is to answer what the user is asking, using the model's earlier responses as supporting context.

The HumanMessage type marks those prompts, so the model can tell the user's questions apart from its own previous replies and focus on what was actually asked.

We now have a working travel guide assistant built on OpenAI. But once it's in front of users, how do we know whether it's working well in real time and whether users are getting the responses they expect?

Observe your AI Application performance with Lunary

While HumanMessage provides a structured way to represent user input, Lunary offers a powerful tool to observe and understand how your LLM is processing that input and responding to it.

This information is essential for optimizing your application's performance, improving the user experience, making sure your chatbot gives accurate and helpful responses, and spotting unexpected errors that reach users.

You can use it to see how well the model handles different HumanMessage prompts and to spot hiccups in conversations, which helps make the responses more helpful as you keep optimizing.

Lunary can also help with:

- Performance Monitoring: Track how different messages and inputs affect the performance of your LLM.

- Error Analysis: Identify and troubleshoot issues with responses generated by the model.

- Optimization: Gain insights into how different prompt structures and message types impact the quality of responses.

- User Insights: Understand user interactions and improve the user experience based on real-time data.

To get these insights for your application, sign up for a Lunary account at https://app.lunary.ai/signup. After registering, you'll have access to your dashboard and API keys, and you can integrate Lunary into your project.

Here's a basic example of setting up Lunary in a project. Replace "YOUR_APP_ID_HERE" with the app ID shown in your Lunary dashboard.

```python
import lunary

lunary.set_config(app_id="YOUR_APP_ID_HERE")
```

With Lunary configured, you can track various aspects of your app's performance. For instance, here is the travel assistant from earlier with Lunary tracking added:

```python
import os

import lunary
from langchain_core.messages import AIMessage, HumanMessage, SystemMessage
from langchain_openai import ChatOpenAI

# Replace with the app ID from your Lunary dashboard
lunary.set_config(app_id="YOUR_APP_ID_HERE")

# Initialize the chat model (API key read from the OPENAI_API_KEY environment variable)
chat = ChatOpenAI(api_key=os.environ["OPENAI_API_KEY"])

# Define the system message
system_message = SystemMessage(content="You are a helpful assistant that provides travel advice.")

# Initialize the message list with the system message
messages = [system_message]


# Track each call to this function in Lunary as a run of the "travel_agent" agent
@lunary.agent("travel_agent")
def AIResponseAgent(messages):
    response = chat.invoke(messages)
    return response


# Collect user input for the conversation
while True:
    user_input = input("Enter your message (type 'exit' to end): ")
    if user_input.lower() == "exit":
        break

    # Add the user's input to the history as a HumanMessage
    messages.append(HumanMessage(content=user_input))

    # Invoke the chat model with the current message history
    response = AIResponseAgent(messages)

    # Print the AI's response and add it to the history
    print("AI:", response.content)
    messages.append(AIMessage(content=response.content))

# Inform the user that the conversation has ended
print("Conversation ended.")
```

After using the application for a while, the Lunary dashboard shows usage insights and traces.

For each user request, I can inspect the trace and see the response the model generated, which helps me judge how useful each response was. Beyond request and response traces, the Lunary dashboard also tracks:

- The total number of runs of my application.

- Average LLM Latency of my application.

- Statistics about New Users and Active Users.

- Average cost per user.

And a lot more.

Together, LangChain's HumanMessage and Lunary help developers build better AI applications and scale them as the number of users grows. HumanMessage gives the model a clear picture of what users are asking, and Lunary shows how well the model responds. As your application grows, the combination helps you build smarter and more reliable systems.
