Observability

Lunary has powerful observability features that let you record and analyze your LLM calls.

There are three main observability features: analytics, logs, and traces.

Analytics and logs are automatically captured as soon as you integrate our SDK.

Python

Learn how to install the Python SDK

JavaScript

Learn how to install the JS SDK

LangChain

Learn how to integrate with LangChain

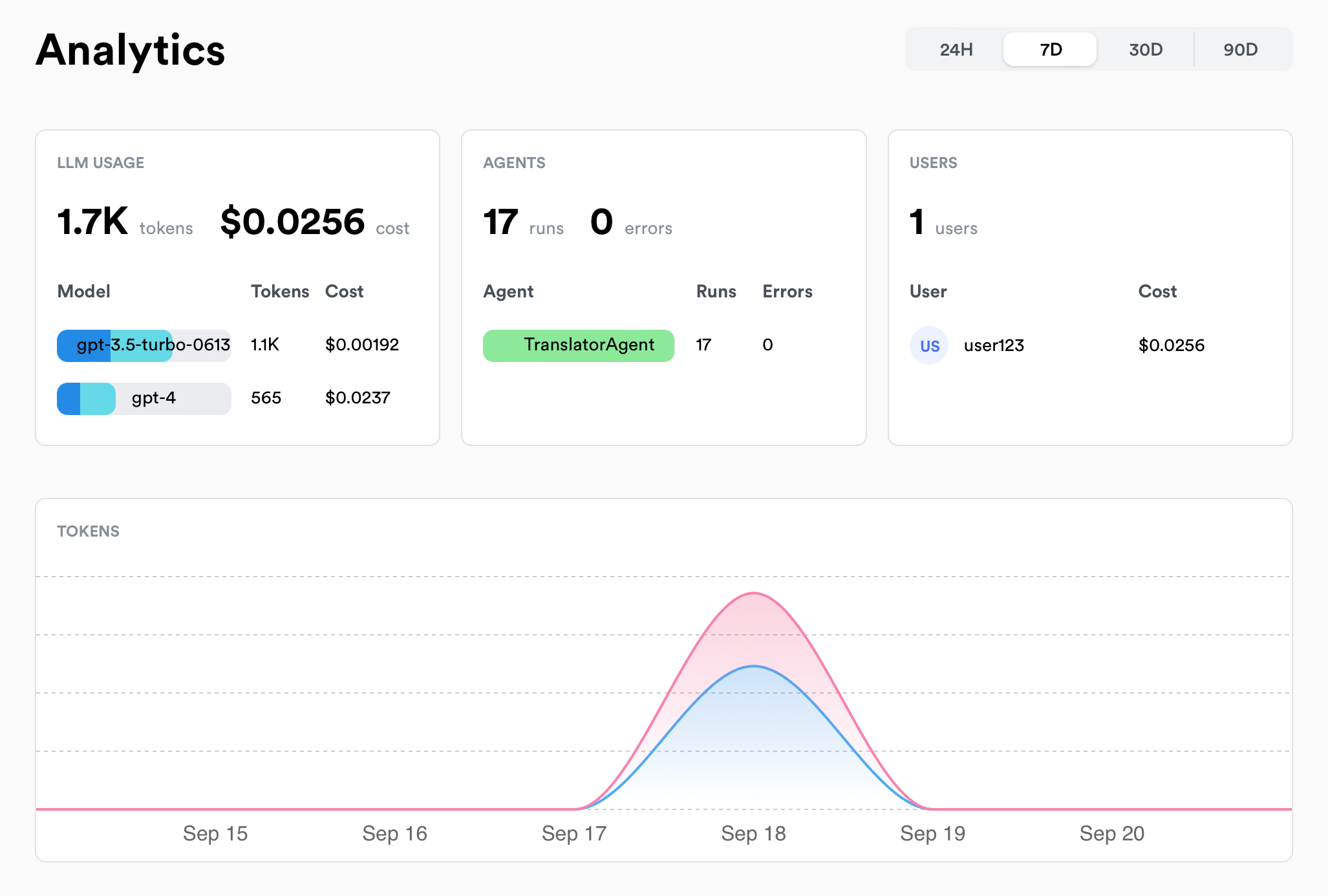

Analytics

The following metrics are currently automatically captured:

| Metric | Description |
|---|---|
| 💰 Costs | Costs incurred by your LLM models |
| 📊 Usage | Number of LLM calls made & tokens used |
| ⏱️ Latency | Average latency of LLM calls and agents |
| ❗ Errors | Number of errors encountered by LLM calls and agents |
| 👥 Users | Usage over time of your top users |

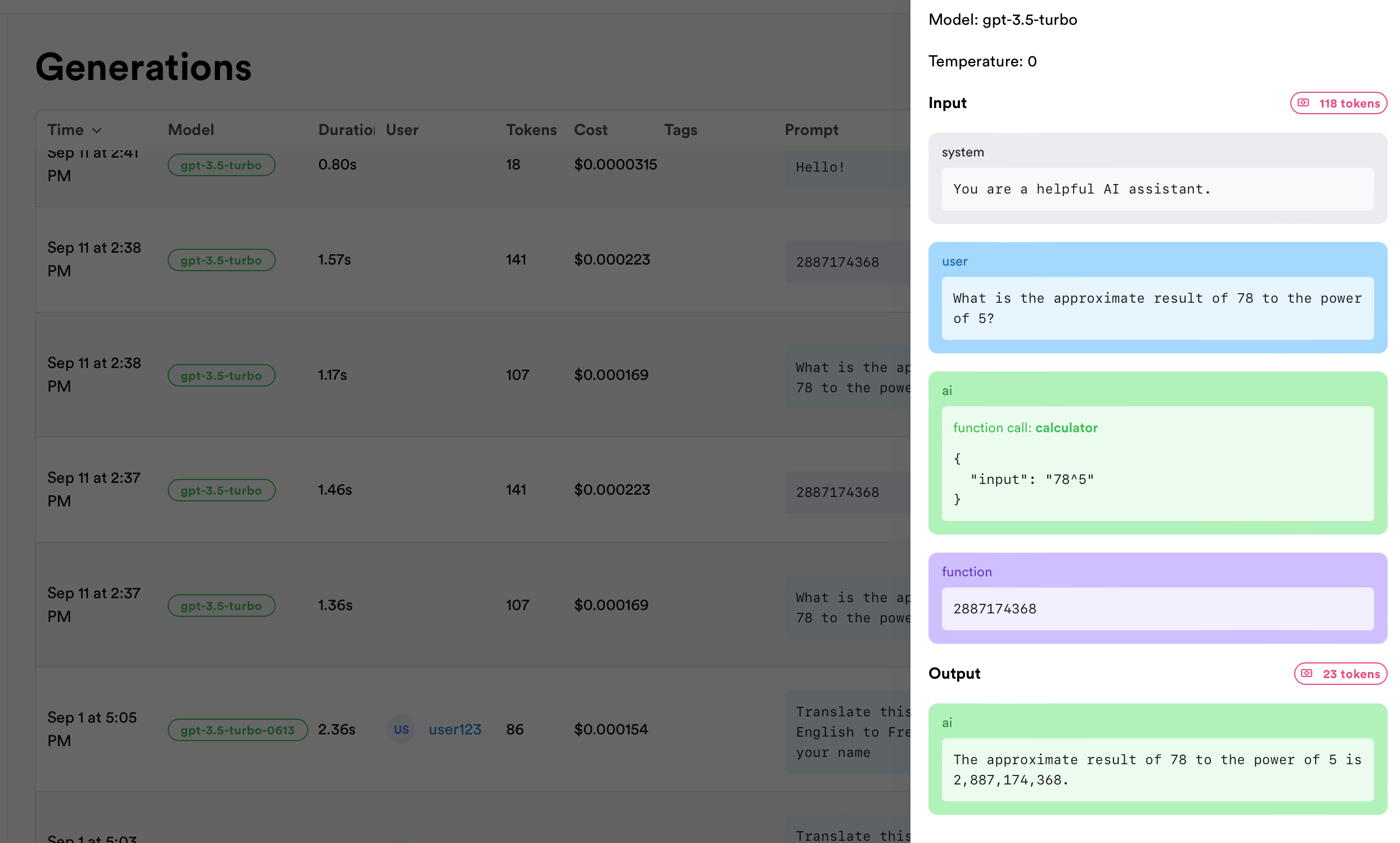

Logs

Lunary allows you to log and inspect your LLM requests and responses.

Logging is automatic as soon as you integrate our SDK.

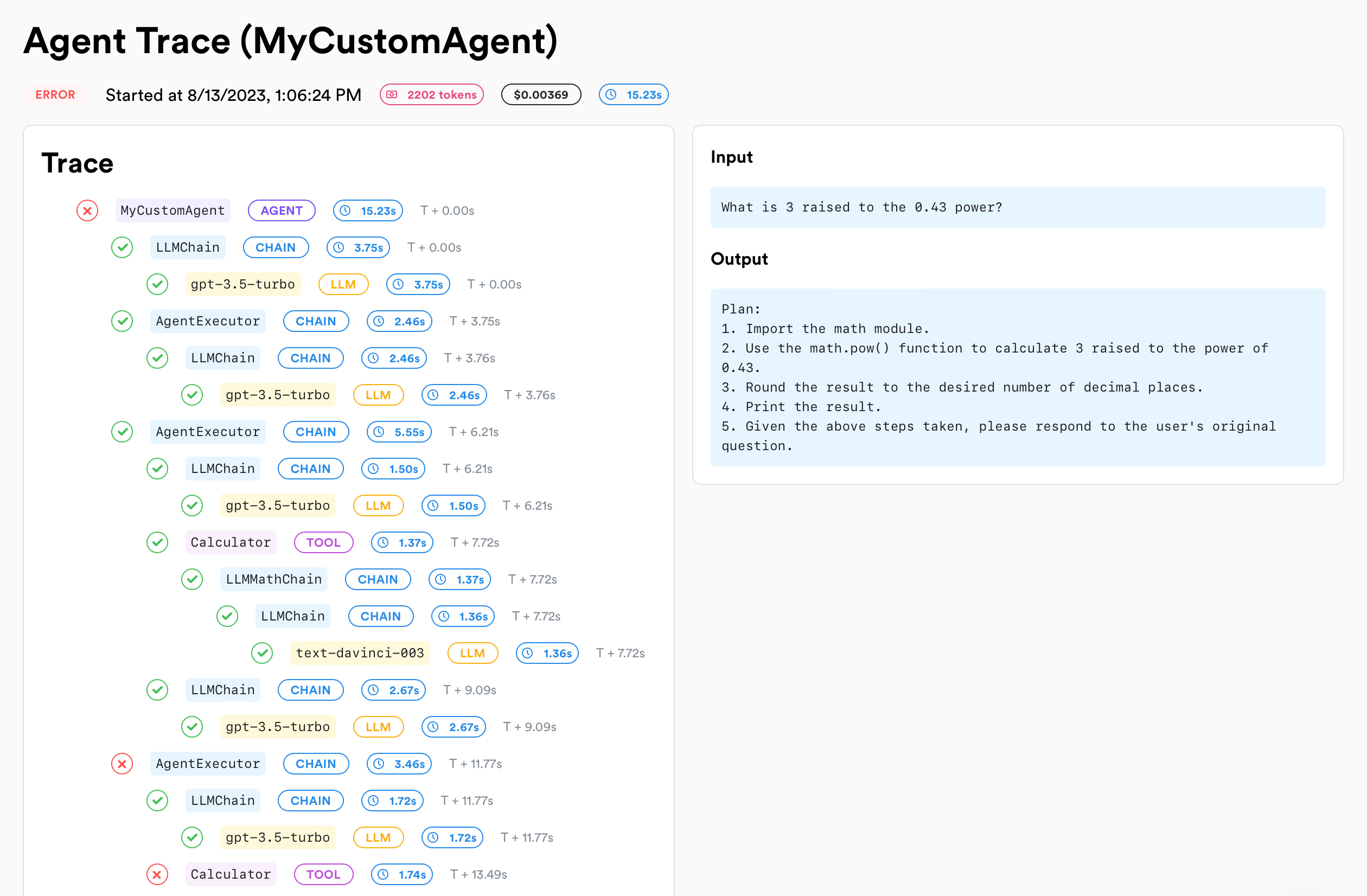

Tracing

Tracing helps you debug complex AI agents and troubleshoot issues.

The easiest way to get started with traces is to use our utility wrappers to automatically track your agents and tools.

Wrapping Agents

When you wrap an agent, its inputs, outputs, and errors are tracked automatically.

Any query run inside the agent is tied to that agent.

```python
import lunary

@lunary.agent()
def MyAgent(input):
    # Your agent custom logic
    # ...
    pass
```
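To make concrete what "automatically tracked" means, here is a standalone sketch that mimics the behavior without using Lunary. The `track_agent` decorator, the `EVENTS` list, and `echo_agent` are hypothetical names invented for illustration; they are not part of Lunary's SDK, and the sketch makes no claim about how the SDK is implemented. It only shows the kind of record a wrapper can capture for each call: the input, the output, and any error.

```python
import functools

# Hypothetical in-memory event store; stands in for a tracking backend.
EVENTS = []

def track_agent(func):
    """Illustrative decorator: records input, output, and errors of each call."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        event = {
            "name": func.__name__,
            "input": {"args": args, "kwargs": kwargs},
            "output": None,
            "error": None,
        }
        EVENTS.append(event)
        try:
            event["output"] = func(*args, **kwargs)
            return event["output"]
        except Exception as exc:
            # Record the error, then re-raise so the caller still sees it.
            event["error"] = repr(exc)
            raise
    return wrapper

@track_agent
def echo_agent(text):
    # Hypothetical agent logic: fail on empty input, otherwise upper-case it.
    if not text:
        raise ValueError("empty input")
    return text.upper()
```

Calling `echo_agent("hi")` appends one event holding the input and the output. Calling `echo_agent("")` appends an event whose `error` field is set, and the exception still propagates to the caller.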

Wrapping Tools

If your agents use tools, you can wrap them as well to track them.

If a wrapped tool is executed inside a wrapped agent, the tool is automatically tied to the agent, so you do not need to reconcile them manually.

```python
import lunary

@lunary.tool(name='MySuperTool')
def MyTool(input):
    # Your tool custom logic
    # ...
    pass
```
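One common way to tie a nested call to its enclosing call automatically is to keep the "current run" in a context variable. The sketch below illustrates that general technique with Python's standard `contextvars` module. It is an assumption about how such linking can work, not a description of Lunary's internals, and `traced`, `RUNS`, `my_tool`, and `my_agent` are hypothetical names.

```python
import contextvars
import functools
import itertools

# Hypothetical stand-ins for a tracing backend; not part of Lunary's SDK.
RUNS = []
_current_run = contextvars.ContextVar("current_run", default=None)
_ids = itertools.count(1)

def traced(kind, name=None):
    """Illustrative decorator factory: records a run and links it to its parent."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            run = {
                "id": next(_ids),
                "kind": kind,
                "name": name or func.__name__,
                # The enclosing run, if any, becomes the parent.
                "parent_id": _current_run.get(),
            }
            RUNS.append(run)
            token = _current_run.set(run["id"])
            try:
                return func(*args, **kwargs)
            finally:
                # Restore the previous run when this one ends.
                _current_run.reset(token)
        return wrapper
    return decorator

@traced("tool", name="MySuperTool")
def my_tool(query):
    return f"result for {query}"

@traced("agent")
def my_agent(question):
    # The tool call happens inside the agent, so it inherits the agent as parent.
    return my_tool(question)
```

After `my_agent("q")`, `RUNS` holds an agent run with no parent and a tool run whose `parent_id` equals the agent's `id`. A tool called outside any agent gets `parent_id` set to `None`.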